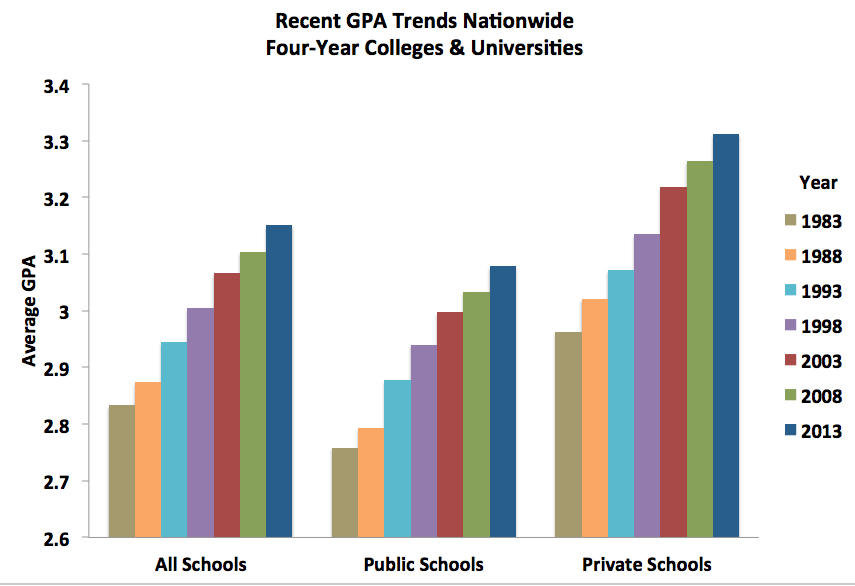

The figure above shows the average undergraduate GPAs for four-year American colleges and universities from 1983 to 2013 based on data from: Alabama, Alaska-Anchorage, Appalachian State, Auburn, Brigham Young, Brown, Carleton, Coastal Carolina, Colorado, Columbia College (Chicago), Columbus State, CSU-Fresno, CSU-San Bernardino, Dartmouth, Delaware, DePauw, Duke, Elon, Emory, Florida, Furman, Gardner-Webb, Georgia, Georgia State, Georgia Tech, Gettysburg, Hampden-Sydney, Illinois-Chicago, Indiana, Iowa State, James Madison, Kent State, Kenyon, Lehigh, Louisiana State, Miami (Ohio), Michigan, Middlebury, Minnesota, Minnesota-Morris, Missouri, Montclair State, Nebraska-Kearney, North Carolina, North Carolina-Greensboro, North Carolina-Asheville, North Dakota, Northern Arizona, Northern Iowa, Northern Michigan, Northwestern, Oberlin, Penn State, Princeton, Puerto Rico-Mayaguez, Purdue, Purdue-Calumet, Rensselaer, Roanoke, Rockhurst, Rutgers, San Jose State, South Carolina, South Florida, Southern Connecticut, Southern Utah, St. Olaf, SUNY-Oswego, Texas, Texas A&M, Texas State, UC-Berkeley, UC-San Diego, UC-Santa Barbara, Utah, Vanderbilt, Virginia, Wake Forest, Washington-Seattle, Washington State, West Georgia, Western Michigan, William & Mary, Wisconsin, Wisconsin-Milwaukee, Wisconsin-Oshkosh, and Yale.

Note that inclusion in these averages does not imply that an institution has significant inflation. Data on the GPAs for each institution where I don’t have a confidentiality agreement can be found at the bottom of this web page. Institutions comprising this average were chosen strictly because they have either published grade data or have sent recent data (2012 or newer) to the author covering a span of at least eleven years.

Last major update, March 29, 2016

Introduction

This web site began as the data link to an op-ed piece I wrote on grade inflation for the Washington Post, Where All Grades Are Above Average, back in January 2003. In the process of writing that article, I collected data on trends in grading from about 30 colleges and universities. I found that grade inflation, while waning beginning in the mid-1970s, resurfaced in the mid-1980s. The rise continued unabated at almost every school for which data were available. By March 2003, I had collected data on grades from over 80 schools. Then I stopped collecting data until December 2008, when I thought it was a good time for a new assessment. At that time, I started working with Chris Healy from Furman University. We collected data from over 170 schools, updated this website, wrote a research paper, collected more data the following year and wrote another research paper.

In late 2015, at the request of more than a few people, I decided to work with Chris Healy on another update. Chris has done the lion’s share of data collection. We now have data on average grades from over 400 schools (with a combined enrollment of over four million undergraduates). I want to thank those who have helped us by either sending data or telling us where we can find data. I also want to thank those who have sent me emails on how to improve my graphics. Additional suggestions are always welcome. Send them to me, Stuart Rojstaczer, at: fortyquestions at gmail.com.

The Two Modern Eras of Grade Inflation

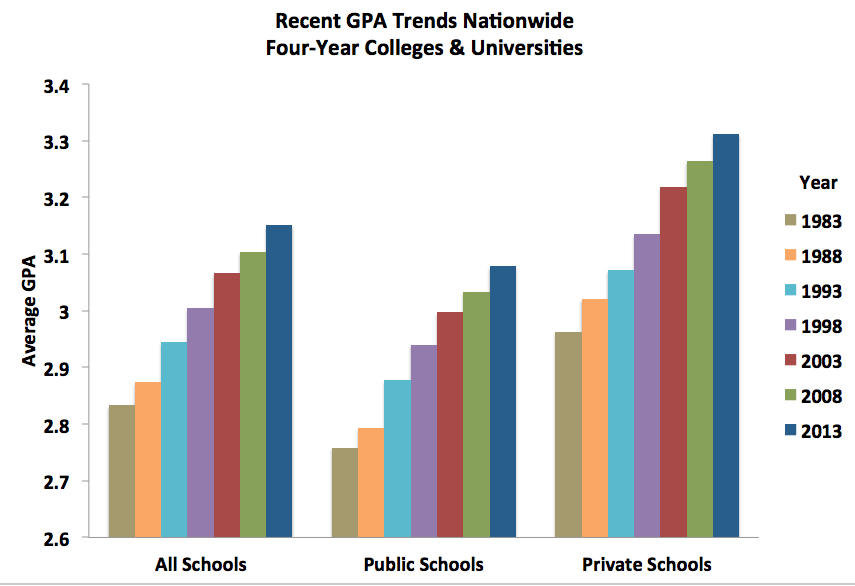

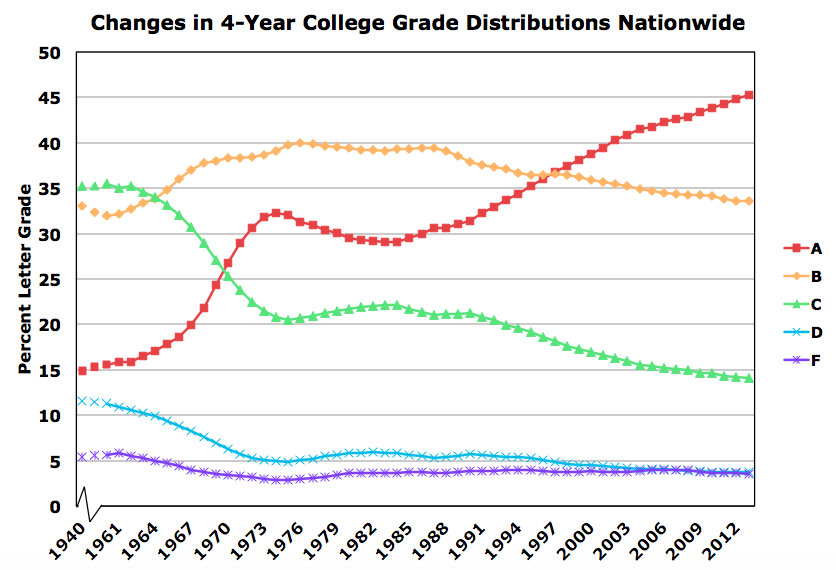

College grading on an A-F scale has been in widespread use for about 100 years. Early on, it was sometimes referred to as “scientific grading.” Until the Vietnam War, C was the most common grade on college campuses. That was true for over fifty years. Then grades rose dramatically. A’s became much more common (see figure below) and C’s, D’s and F’s declined in popularity (there’s more discussion of this topic at the end of this post). GPAs rose on average by 0.4 points. By 1973, the GPA of an average student at a four-year college was 2.9. A’s were twice as common as they were before the 1960s, accounting for 30% of all A-F grades.

Why did this happen? The reasons were complex. Here’s an attempt at a simplified explanation. Faculty attitudes about teaching and grading underwent a profound shift that coincided with the Vietnam War. Many professors, certainly not all or even a majority, became convinced that grades were not a useful tool for motivation, were not a valid means of evaluation and created a harmful authoritarian environment for learning. Added to this shift was a real-life exigency. In the 1960s, full-time male college students were exempt from the military draft. If a male college student flunked out, chances were that he would end up as a soldier in the Vietnam War, a highly unpopular conflict on a deadly battlefield. Partly in response to changing attitudes about the nature of teaching and partly to ensure that male students maintained their full-time status, grades rose rapidly. When the war ended so did the rise in grades. Perhaps the attitude shift of many professors toward grading needed the political impetus of an unpopular war to change grading practices across all departments and campuses. I call this period the Vietnam era of grade inflation.

The rise in college grades during the Vietnam War was well documented. In particular, one college administrator from Michigan State, Arvo Juola, collected annual average GPAs from colleges and universities across the country. This one-man undertaking well before the computer era was impressive. There were some people who maintained grades were rising in the Vietnam era because students in the 1960s and early 1970s were better than those over the previous fifty years, but the conventional wisdom was that those claims were unfounded.

At the end of the Vietnam era of grade inflation, Juola wrote a short and prescient paper that both documented the end of the era and warned against further inflation in the future. That “future” began ten years later.

In the early 1980s, college grades began to rise again, but at a slow and barely identifiable pace. By the late 1980s, GPAs were rising at a rate of 0.1 points per decade (see top chart), a rate 1/4 of that experienced during the Vietnam era (the pace was so slow that until the 2000s it wasn’t entirely clear that it was a national phenomenon). A’s were going up by about five to six percentage points per decade. During that time, there was something else new under the sun on college campuses. A new ethos had developed among college leaders. Students were no longer thought of as acolytes searching for knowledge. Instead they were customers. Phrases like “success rates” began to become buzz phrases among academic administrators. A former university chancellor from the University of Wisconsin, David Ward, summed up this change well in 2010:

“That philosophy (the old approach to teaching) is no longer acceptable to the public or faculty or anyone else. . . . Today, our attitude is we do our screening of students at the time of admission. Once students have been admitted, we have said to them, “You have what it takes to succeed.” Then it’s our job to help them succeed.”

During this era, which has yet to end, student course evaluations of classes became mandatory, students became increasingly career focused, and tuition rises dramatically outpaced increases in family income. When you treat a student as a customer, the customer is, of course, always right. If a student and parent of that student want a high grade, you give it to them. Professors faced a new and more personal exigency with respect to grading: to keep their leadership happy (and to help ensure their tenure and promotion) they had to focus on keeping students happy. It’s not surprising that grades have gone up during this era. I call this period of grade inflation the “student as consumer era” or the “consumer era” for short.

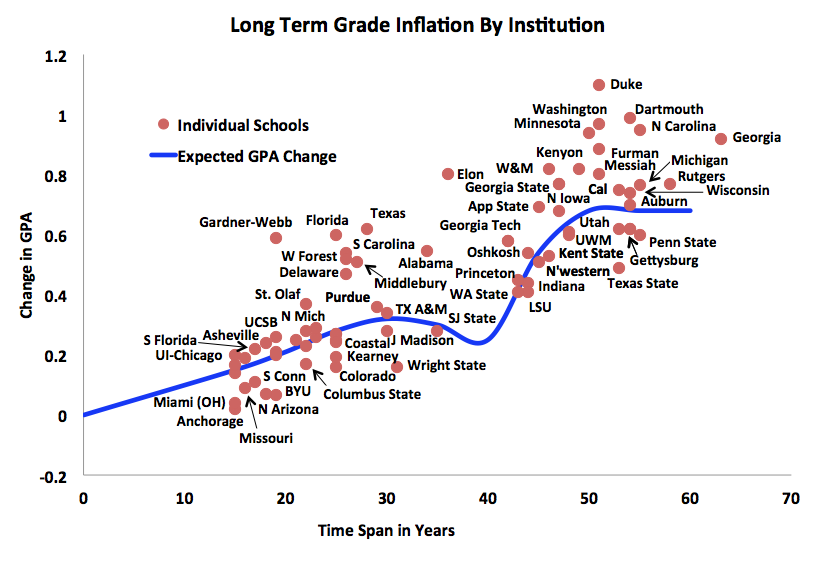

By the mid-to-late 1990s, A was the most common grade at an average four-year college campus (and at a typical community college as well). By 2013, the average college student had about a 3.15 GPA (see first chart) and forty-five percent of all A-F letter grades were A’s (see second chart). If you pay more for a college education in the consumer era, then you of course get a higher grade. By 2013, GPAs at private colleges in our database were on average over 0.2 points higher than those found at public schools.

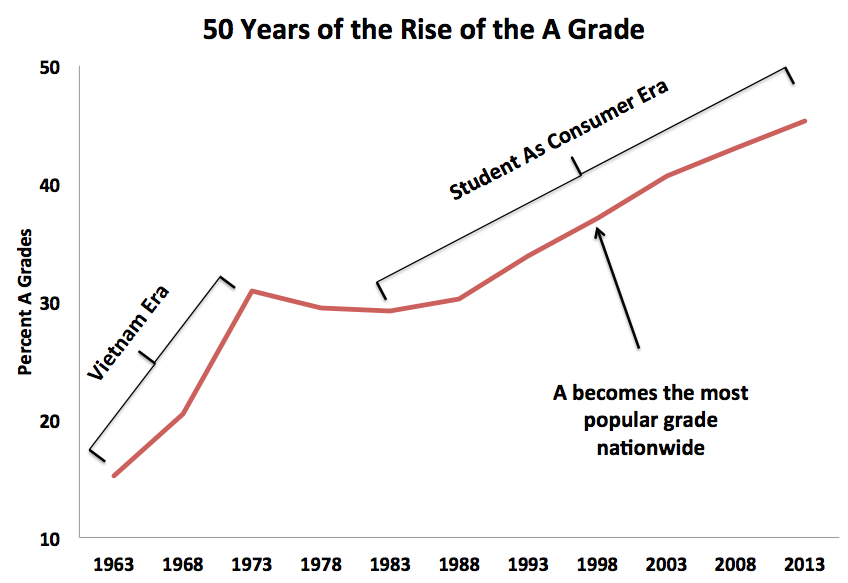

Long Term Trends

The above graphs represent averages. What about grade changes over the last fifty years at individual institutions? I can show those changes at most schools in our database. The figure below shows the amount of GPA rise for all schools where we have current data at least 15 years in length (and don’t have confidentiality agreements) and maps it to the number of years we have data for each school. The blue line is the expected amount of GPA rise a school would have if it were a garden-variety grade inflator. It’s essentially the percent A’s curve of the second figure in terms of GPA, flipped horizontally and then vertically.

In previous versions of this graph posted on this web site, the blue-line equivalent was a best-fit regression to the data. For those who liked the old blue-line (and I’ve seen the PowerPoint slides of college administrators who’ve used this graph and liked to use that line to compare their school’s grade inflation – which they usually publicly avow doesn’t exist – with national averages), the rate of grade inflation for the new dataset over the entire 50 years of college grade inflation (both the Vietnam era and the consumer era) averages 0.14 GPA points per decade, the same as it was in our previous update.
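For readers who want to put their own school on the same footing, here is a minimal sketch of how a per-decade rate can be estimated from a series of yearly average GPAs using an ordinary least-squares slope. The exact regression procedure behind the old blue line isn't spelled out here, so treat this as one plausible approach rather than the method used for this site. The function name and the example numbers are made up for illustration and do not come from the database.

```python
def inflation_rate_per_decade(years, gpas):
    """Least-squares slope of GPA against year, scaled to GPA points per decade."""
    n = len(years)
    if n < 2 or n != len(gpas):
        raise ValueError("need at least two (year, GPA) pairs of equal length")
    mean_year = sum(years) / n
    mean_gpa = sum(gpas) / n
    # Covariance-like and variance-like sums for the slope formula.
    sxy = sum((y - mean_year) * (g - mean_gpa) for y, g in zip(years, gpas))
    sxx = sum((y - mean_year) ** 2 for y in years)
    if sxx == 0:
        raise ValueError("years must not all be identical")
    return 10 * sxy / sxx  # slope is per year; multiply by 10 for per decade


# Hypothetical school: invented numbers, not taken from the database.
example_years = [1965, 1975, 1985, 1995, 2005, 2015]
example_gpas = [2.50, 2.85, 2.90, 3.00, 3.10, 3.20]

rate = inflation_rate_per_decade(example_years, example_gpas)
print(f"{rate:.3f} GPA points per decade")
```

A single straight-line fit over 50 years blends the fast Vietnam era rise with the slower consumer era rise, which is one reason a long-term average like 0.14 per decade sits above the contemporary rate of about 0.10.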

Some schools aren’t labeled because they cluster together and hug the blue line over the last 15 to 25 years: Brown, DePauw, Hampden-Sydney, Iowa State, Roanoke, Rensselaer, SUNY-Oswego, UC-San Diego, Virginia, West Georgia, and Western Michigan. CSU-San Bernardino almost completely overlaps UW-Milwaukee.

There are a small number of schools (about 15% of all schools in our database) that have experienced only modest increases in GPAs over the last 15 to 20 years, but most of them have average GPAs that already exceed 3.0. There are no schools in our dataset that have been untouched by rising grades over the last 50 years. Grades went up significantly at all schools in our database in both the Vietnam era and the first half of the consumer era. That said, a few schools have had modest to negligible recent grade rises (and rarely, modest drops in grades) and have relatively low GPAs, as will be discussed in the next section.

It’s worth looking at GPA rises at schools for which we have 50 years or more of data. These schools’ data show the full extent of both the Vietnam era rise and the consumer era rise up until 2012-15 (the years of our most current data for schools). The range in what these two periods of inflation combined have done to college grades is wide, but it is always significant. At Texas State, a historically low inflator, the average graduate’s GPA has migrated from a C+ to a B. At Duke, a high inflator, the average graduate’s GPA has migrated from a C+/B- to an A-.

Not all of the grade rises observed at these schools are due to inflation. At private schools like Duke and Elon and at public schools like Florida and Georgia, the caliber of student enrolled is higher than it was thirty or fifty years ago. But as is discussed three sections down, their rises in average GPA are mainly due to the same factor found at other schools: professors are grading easier year by year by a tiny amount.

The observed grade change nationwide in the consumer era is the equivalent of every class of 100 making two B students into B+ students every year and alternating between making one A- student into an A student and one B+ student into an A- student every year. It’s so incrementally slow a process that it’s easy to see why an individual instructor (or university administrator or leader) can delude himself into believing that it’s all due to better teaching or better students. But after 30 years of professors making these kinds of incremental changes, the amount of rise becomes so large that what’s happening becomes clear: mediocre students are getting higher and higher grades. It’s perhaps worth noting that if you strictly applied the above grading changes in a typical class of 100 at a four-year college today, you’d run out of B students to elevate to B+ students in about seven years.
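The arithmetic behind that class-of-100 picture can be checked with a short sketch. It assumes the common 4.0-scale grade points (A = 4.0, A- = 3.7, B+ = 3.3, B = 3.0), which is a convention and not something specified above, and it ignores the point at which the class runs out of B students.

```python
# Assumed grade points on a standard 4.0 scale (a convention, not stated in the text).
GRADE_POINTS = {"A": 4.0, "A-": 3.7, "B+": 3.3, "B": 3.0}
CLASS_SIZE = 100


def yearly_gpa_gain(year_index):
    """Class-average GPA gain in one year under the upgrade pattern described above."""
    # Every year, two B students become B+ students.
    gain = 2 * (GRADE_POINTS["B+"] - GRADE_POINTS["B"])
    # Alternate years: one A- becomes an A, or one B+ becomes an A-.
    if year_index % 2 == 0:
        gain += GRADE_POINTS["A"] - GRADE_POINTS["A-"]
    else:
        gain += GRADE_POINTS["A-"] - GRADE_POINTS["B+"]
    return gain / CLASS_SIZE


gain_per_decade = sum(yearly_gpa_gain(year) for year in range(10))
print(f"{gain_per_decade:.3f} GPA points per decade")
```

Under these assumptions the class average climbs by roughly 0.095 points per decade, in line with the consumer era rate of about 0.1 points per decade.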

Statements have been made by some that grade inflation is confined largely to selective and highly selective colleges and universities. The three charts above indicate that these statements are not correct. Significant grade inflation is present everywhere and contemporary rates of change in GPA are on average the same for public and private schools.

Recent Trends in Grade Inflation

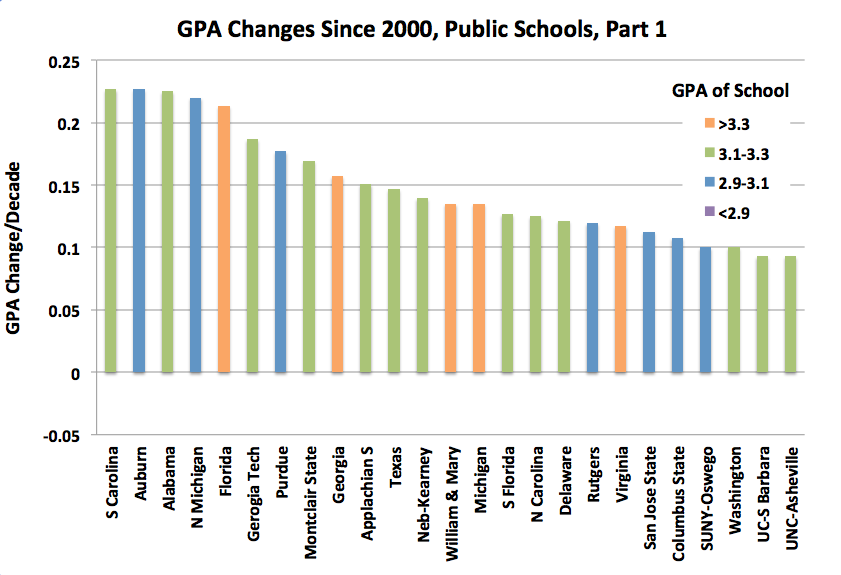

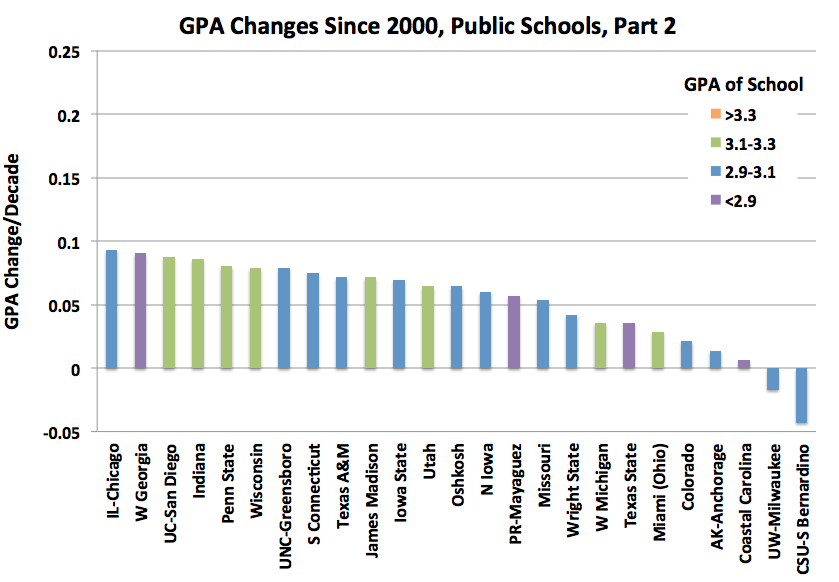

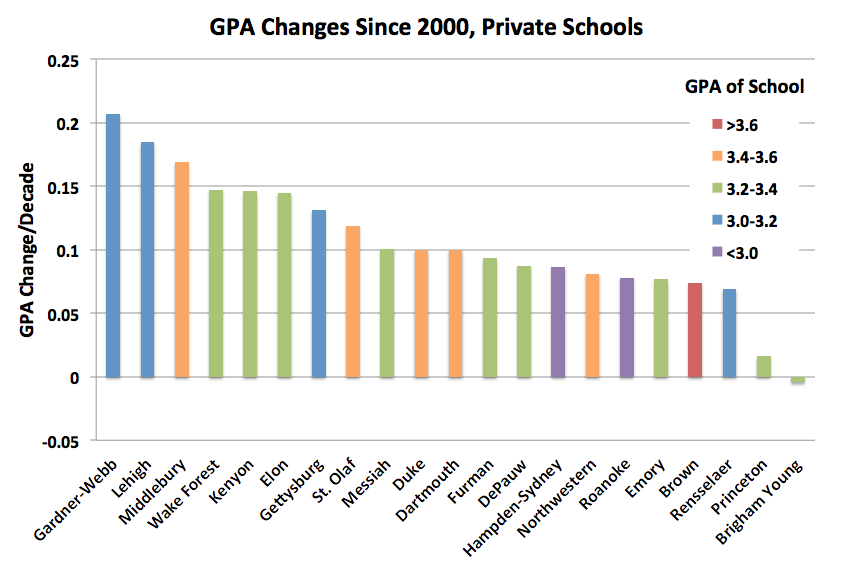

The charts below examine the magnitude of the rate of grade inflation for almost all of the institutions for which we have sufficient data to examine contemporary trends (some data, in particular data from private schools, comes attached with confidentiality agreements). The average GPA change since 2000 at both public and private schools is 0.10 points per decade, but the range is wide.

The two charts for public schools indicate that the tendency is for schools with high average GPAs to also have high rates of contemporary change and for schools with low average GPAs to continue to have low rates of change. Essentially, the gap keeps widening between the high and low GPA schools. Flagship state schools in the South have the highest contemporary rates of grade inflation for this sample of public schools. Historically, they had low GPAs and appear to be “catching up” to schools in the North. This was true for almost all of the Southern flagship schools in the 1990s as well.

Some schools that were relatively immune to grade inflation in the 1990s, such as University of Nebraska-Kearney and Purdue, have experienced significant consumer-era inflation in the 2000s. Recent inflation rates are relatively low at many flagship state schools in the Midwest. At about nine out of fifty schools, consumer era inflation has essentially ended at least temporarily. University of Colorado made a top-down decision to control grades and those efforts have had an effect on professors’ grading behavior. CSU-San Bernardino has become less selective in accepting students in response to budgetary pressures. Coastal Carolina and Texas State have relatively low GPAs and have been relatively resistant to grade inflation over the last 50 years. The reason for the negligible (and in one case negative) inflation rate at the other schools is unknown.

Private schools in our database, as noted in the text above and shown in the figure below, have higher GPAs than public schools. On average, inflation rates at private schools were higher in the 1990s than they were in the 2000s. Unlike with public schools, there is no correlation between GPA and contemporary inflation rates. There is less variability in inflation rate at private schools in comparison to public schools.

Two schools have had inflation rates that have been negligible when 2000 is used as the base year. At Brigham Young, GPAs have remained steady year after year. The situation at Princeton is more complex. An anti-inflation policy was implemented in the 2005 academic year. GPAs dropped by 0.05 points in 2005 and A’s were no longer the most popular grade. In 2014, that policy was abandoned. The reason for this abandonment was simple. As stated by Princeton’s new president, Christopher Eisgruber, the grading policy was “a considerable source of stress for many students, parents, alumni, and faculty members.” In other words, customers complained and the customer is always right. In 2014, average GPAs at Princeton popped back to about the same level as in 2002 and A became, once again, the most common grade.

Not shown on the graph (and not included in our estimate of a 0.10 per decade rise in GPA for private schools since 2000) because it’s an extreme outlier is Wellesley. In 2000, Wellesley had the highest average GPA in our database, 3.55. In 2003, Wellesley approved a grade deflation policy where the mean grade in 100-level and 200-level courses with 10 or more students was expected to be no higher than 3.33 (B+). GPAs dropped dramatically, down to 3.28 in 2005. No other school in our database (and I’m certain no school anywhere in the US) has had a drop or rise in GPA anywhere close to this size over a period of two years. Since then, average GPAs at Wellesley have crept back up at a rate of about 0.09 per decade, but were still in the B+ range as of 2014. The grade deflation policy of Wellesley essentially set its GPA clock back twenty years.

There are other private schools that have restricted high grades. For example, the average GPA of Reed College graduates hovered between 3.12 and 3.20 from 1991 to 2008 as a result of a school-wide grading policy. Whether average GPAs still hover within that range is unknown.

My attitude about these top-down clamps on grades (to be fair, Princeton’s past effort to deflate grades was not strictly top-down; the change was approved overwhelmingly by the faculty) is positive. Leadership nationwide created the incentives that caused A’s to become the most common grade. They need to be the ones to create incentives to bring back honest grading.

Some have made statements that grade inflation in the consumer era has been driven by the rise of adjunct faculty. But inflation rates are high at schools with low numbers of adjuncts. If anything, schools with high levels of adjunct faculty have experienced lower rates of consumer era grade inflation. Every instructor is inflating grades, whether they are tenure-track or not. The influence of adjunct faculty on grades has been overstated. I’ll get back to this point when I discuss grades at community colleges.

Grade Variation Between Disciplines and As a Function of School Selectivity

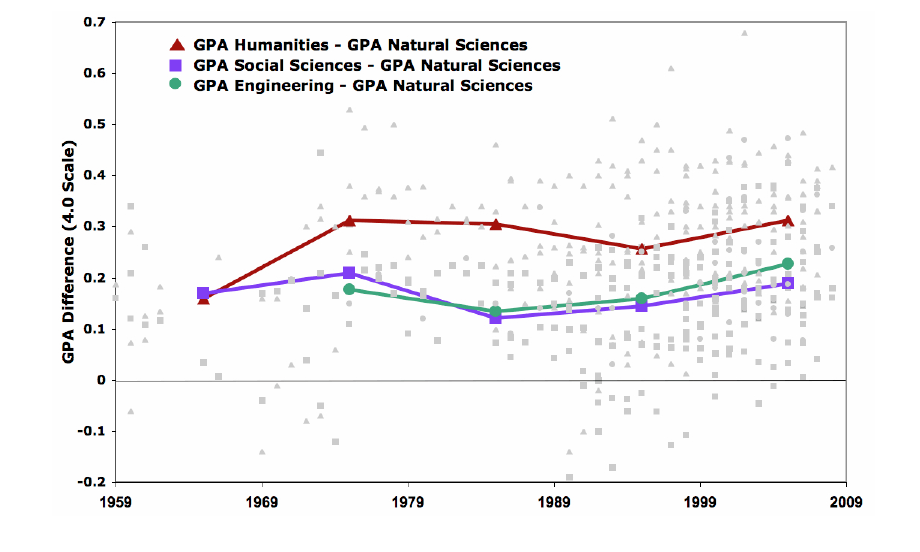

It is commonly said that there is more grade inflation in the sciences than in the humanities. This isn't exactly correct. What is true is that both the humanities and the sciences have witnessed rising grades since the 1960s, but the starting points for the rise were different. Below are data from our paper published in 2010. The gray dots represent GPA differences between major disciplines at individual schools. The colored lines indicate averages. The grading differential between the sciences and humanities has been present for over five decades. For those interested in such things, those in the social sciences - like true politicians - tend to grade between the extremes of the humanities and natural sciences.

What have sometimes changed are student attitudes about grade differences between disciplines. They used to be accepted with a shrug. My own personal observation is that students at relatively high-grading schools are so nervous about grades today - paradoxically this nervousness seems to increase with increased grade inflation - that the shrug sometimes turns into a panic. This “paradox” perhaps can be explained by the compression of grades at the top caused by grade inflation. For example, our dataset suggests that at a small number of private schools in the country solid A’s (and A+ grades) are so common that a GPA in excess of 3.75 is now required to achieve any level of graduation with honors. At those schools, an A- means being one step further away from receiving formal recognition as an outstanding student; a B+ can be “devastating.”

The increased nervousness of students about grades over the last thirty years can be overstated. Humanities majors and classes have become increasingly unpopular despite their nearly universally high grades. Students flock to economics despite its tendency to grade more like a natural science than a social science. The bottom line is that grading nearly everywhere is easy. After 50 plus years of grade inflation across the country, A is the most popular grade in most departments in most every college and university.

It is said that grade inflation is by far the worst in Ivy League schools. This isn't exactly correct. We discuss this issue at length in our 2010 and 2012 research papers. Grades are rising for all schools and the average GPA of a school has been strongly dependent on its selectivity since the 1980s.

Attempts to Relate Recent Grade Inflation to Improved Student Quality and Other Factors

Some administrators and professors have tried to ascribe much of the increase in GPA in the consumer era to improvements in student quality. Almost all of these statements linking GPA to the presence of better students have been qualitative in nature. But there have been some attempts, notably at Duke, Texas and Wisconsin, to quantify this relationship using increases in SAT or ACT as a surrogate for increases in student quality.

Such quantitative efforts are of dubious worth because even the organization that administers the SAT, the College Board, is unable to show that SAT scores are a good predictor of college GPA. A study by the University of California system of its matriculants showed that SAT scores explained less than 14% of the variance in GPA. Bowen and Bok, in a 1998 analysis of five highly selective schools, found that SAT scores explained only 20% of the variance in class ranking. Their analysis also indicated that a 100-point increase in SAT was responsible for, at most, a 5.9 percent increase in class rank, which corresponds to roughly a 0.10 increase in GPA. This result matches that of Vars and Bowen, who looked at the relationship between SAT and GPA for 11 selective institutions. McSpirit and Jones, in a 1999 study of grades at a public open-admissions university, found a coefficient of 0.14 for the relationship between a 100-point increase in SAT and GPA.

In our 2010 Teachers College Record paper, we found, similar to Bowen and Bok and Vars and Bowen, a 0.1 relationship between a 100-point increase in SAT and GPA using data from over 160 institutions with a student population of over two million.

At both Texas and Duke, GPA increases of about 0.25 were coincident with mean SAT increases (Math and Verbal combined) in the student population of about 50 points. At Wisconsin, ACT increases of 2 points (the equivalent to an SAT increase of about 70 points) were coincident with a GPA rise of 0.21. The above mentioned studies indicate that student quality increases cannot account for the magnitude of grade inflation observed. The bulk of grade inflation at these institutions is due to other factors.
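The comparison can be made explicit with a back-of-the-envelope sketch. It applies the roughly 0.10 GPA points per 100 SAT points coefficient cited above to the SAT and GPA changes quoted for these schools. The function and variable names are invented for illustration, and the inputs are the approximate figures from the text, not new data.

```python
# Coefficient cited above: about 0.10 GPA points per 100-point SAT increase.
GPA_PER_100_SAT = 0.10

# (label, SAT-equivalent increase, observed GPA rise), as quoted in the text.
CASES = [
    ("Texas and Duke", 50, 0.25),
    ("Wisconsin", 70, 0.21),  # ACT +2, treated as roughly SAT +70 per the text
]


def explained_rise(sat_increase, coeff=GPA_PER_100_SAT):
    """GPA rise attributable to an SAT increase under a linear coefficient."""
    return coeff * sat_increase / 100


for label, sat_increase, observed in CASES:
    expected = explained_rise(sat_increase)
    unexplained = observed - expected
    print(
        f"{label}: expected {expected:.2f}, observed {observed:.2f}, "
        f"unexplained {unexplained:.2f} ({unexplained / observed:.0%} of the rise)"
    )
```

Even with the larger 0.14 coefficient from McSpirit and Jones, `explained_rise(70, 0.14)` comes to about 0.10, still well short of the 0.21 rise observed at Wisconsin.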

While local increases in student quality may account for part of the grade rises seen at some institutions, the national trend cannot be explained by this influence. There is no evidence that students have improved in quality nationwide since the early 1980s.

The influence of affirmative action is sometimes used to explain consumer era grade inflation. However, much of the rise in minority enrollments occurred during a time, the mid-1970s to mid-1980s, when grade inflation waned. As a result, it is unlikely that affirmative action has had a significant influence.

Yet grades continue to rise. There is little doubt that the resurgence of grade inflation in the 1980s principally was caused by the emergence of a consumer-based culture in higher education. Students are paying more for a product every year, and increasingly they want and get the reward of a good grade for their purchase. Administrators and college leaders agree with these demands because the customer is always right. In this culture, professors are not only compelled to grade easier, but also to water down course content. Both intellectual rigor and grading standards have weakened. The evidence for this is not merely anecdotal. Students are highly disengaged from learning, are studying less than ever, and are less literate.

Internal university memos say much the same thing. For example, the chair of Yale's Course of Study Committee, Professor David Mayhew, wrote to Yale instructors in 2003, "Students who do exceptional work are lumped together with those who have merely done good work, and in some cases with those who have done merely adequate work." In 2001, Dean Susan Pedersen wrote to the Harvard faculty:

"We rely on grades not only to distinguish among our students but also to motivate them and the Educational Policy Committee worries that by narrowing the grade differential between superior and routine work, grade inflation works against the pedagogical mission of the Faculty....While accepting the fact that the quality our students has improved over time, pressure to conform to the grading practices of one's peers, fears of being singled out or rendered unpopular as a 'tough grader,' and pressures from students were all regarded as contributory factors...."

The Era of A Becoming Ordinary

In the Vietnam era, grades rose partly to keep male students from flunking out (and ending up being drafted into war). But the consumer era is different. It’s about helping students look good on paper, helping them to “succeed.” It’s about creating more and more A students. As the chart below (updated from our 2012 paper) indicates, B replaced C as the most common grade and Ds and Fs became less common in the Vietnam era. The consumer era, in contrast, isn’t lifting all boats. Ds and Fs have not declined significantly on average, but A has replaced B as the most common grade. As of 2013, A was the most common grade by far and was close to becoming the majority grade at private schools. America’s professors and college administrators have been promoting a fiction that college students routinely study long and hard, participate actively in class, write impressive papers, and ace their tests. The truth is that, for a variety of reasons, professors today commonly make no distinctions between mediocre and excellent student performance and are doing so from Harvard to CSU-San Bernardino.

Where Recent Grade Inflation Is Absent

While grade inflation is pervasive at America's four-year colleges and universities, it is no longer taking place everywhere. As noted above, grades have reached a plateau at a small, but significant number of schools (about 15 percent of the schools in our database). Will this plateau be long lived? Will other schools follow their lead? Neither prospect is likely. There are too many forces on these institutions to keep them resistant to the historical and contemporary fashion of rising grades. Administrators continue to be focused on satisfying their student customers. Some deans and presidents are concerned about educational rigor, but they do eventually leave and are not usually replaced with like-minded people. Witness what recently happened at Princeton as an example of this kind of change. Student course evaluations are still used for tenure and promotion. High school grades continue to go up, which makes new college students less and less familiar with non-A grades. Tuition continues to rise, which makes both students and parents increasingly feel that they should get something tangible for their money. It’s not surprising that schools with the highest tuition not only tend to have the highest grades, but have grades that continue to rise significantly.

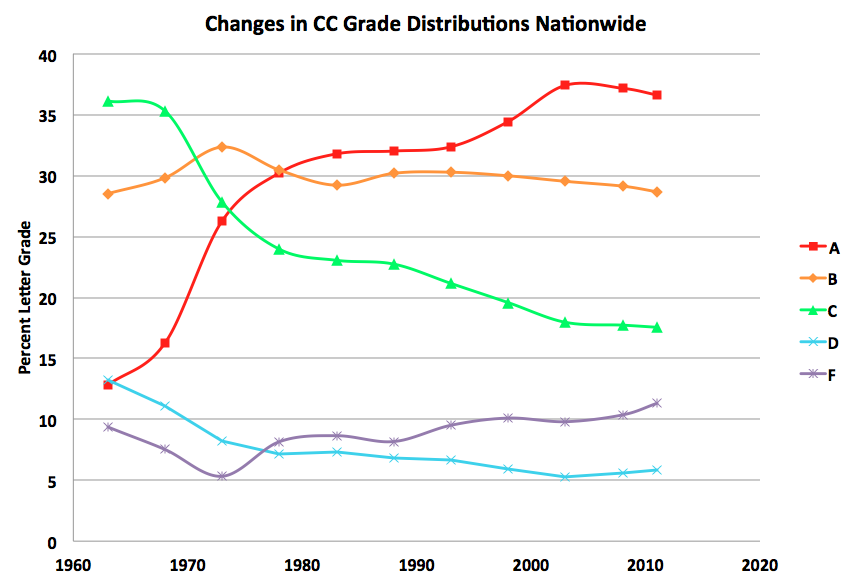

Where has the fashion of rising grades ended? I haven’t focused on data from community colleges, but Chris Healy has collected data from over one hundred of them. The data indicate that, at least when it comes to averages, grades have stopped rising at those schools. GPAs actually dropped on average by 0.04 points from 2002 to 2012. The national data in the chart below are in agreement with average grades published by the California Community Colleges System, which show a drop in grades in the 2000s. The average GPAs in our database over the time period 1995-2011 are identical to those from the CCC System, 2.75. That number may seem low in comparison to four-year college data, but it is similar to the average GPA of first-year and second-year students at a typical four-year public school. It’s actually about 0.1 points higher than the recent average GPAs of first-year and second-year students at a commuter university like UW-Milwaukee, which suggests that community colleges, relative to talent-level, are grading very generously even by contemporary standards.

The graph above was done in an admittedly slapdash fashion. I’ve simply taken every data point Chris has collected, put it in a spreadsheet and plotted averages every five years (smoothed over a five-year interval) from 1963 to 2008 and then added 2011 (to plot the most recent data for comparison).

A is the most common grade at community colleges. That transition occurred two decades earlier than it did at four-year schools. Vietnam era grade inflation produced the same rise in average GPA, 0.4 points. But the consumer era rise in average GPA is much more modest at community colleges and totals about 0.1 points (a rise to a 2.8 average GPA) at its peak. Then the percentage of A’s drops slightly over the last third of the consumer era for which we have data. Note that the percentage of Fs begins to rise at the end of the Vietnam era and that percentage more than doubles by 2011. Just like at four-year schools, A’s and B’s are unrealistically common at community colleges. But grade rises ended over a decade ago at two-year schools nationally (of course there are exceptions to this average behavior) and at schools in the California Community Colleges System.

Adjunct teaching percentages are high at these schools, administrators treat students as customers at these schools, and student course evaluations are important at these schools, but grades declined in the 2000s. Why? I don’t know, but because this is a web post, I feel comfortable speculating. One factor may be that tuition is low at these schools, so students don’t feel quite so entitled. Another factor may be that community college students come, on average, from less wealthy homes, so students don’t feel quite so entitled. The mostly steady rise of F grades since the end of the Vietnam era suggests that the overall quality of students at community colleges has been in a steady decline for decades. Perhaps no amount of consumerism can make up for a student population that is increasingly unprepared for college work or doesn’t show up.

Note on Sample Changes Over Time

The general trends seen in our latest update are identical to those in our previous updates. It is a limitation of our work that we can’t sample the same institutions every time. These are not easy data to find or get in the quantities we need to make assessments. Universities and colleges that historically have given us data sometimes say no to new requests and we have to find other schools that will say yes (increasingly, this means that we have to agree to confidentiality agreements and can’t publicly display individual data). When schools that once publicly displayed data online stop doing so, we have to drop them from our database. We add new schools we find that have data online.

Despite this limitation, our numbers stay almost exactly the same with every sampling. Historical numbers on average percent A’s in this update are the same as those found in our 2012 paper (which had much more extensive data). Historical numbers on average GPAs for private schools in the latest update are all about one percent lower than found in previous updates. The fact that we are getting the same numbers (that agree with historical studies) with every update gives us confidence that our results not only accurately reflect trends in grading over time but also accurately measure average GPAs and average grade distributions for any year for which we have data.

Additional Contributions Wanted

If you have verifiable data on grading trends not included here and would like to see them on this web site, please contact me, Stuart Rojstaczer. I will acknowledge your contribution by name or, if you prefer, keep the data's origin anonymous.

For More Information

For those interested in even more detail, here are some links to other material.

2012 research paper on grading in America, here.

2013 talking head interview about 2012 paper, here.

2010 research paper on grading in America, here.

New York Times Economix blog Q&A about grade inflation, here.

Grades gone wild (published in the Christian Science Monitor), here.

Original article that started it all (published in the Washington Post), here.

What else I do besides crunching grade numbers with Chris Healy once every five to seven years, here.

The Data Currently Available

The data presented here come from a variety of sources including administrators, newspapers, campus publications, and internal university documents that were either sent to me or found through a web search. If you see any errors, please report them. Most of the data sets span at least several years.

Some of the data originated as charts. I digitized these charts using commercially available software. Some of the data were reported in terms of grade point average (GPA). A good deal of the data were in terms of percent of each grade awarded. I converted these data into GPAs using formulae that I developed from data at other schools for which we have both GPA and grade distribution data, or through direct calibration against the limited GPA data available at the institutions themselves.
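For readers who want a feel for this kind of conversion, here is a minimal sketch in Python. It is not the calibrated formula used for this site; it is the simplest possible version, a weighted average of grade points. The 4.0 scale without plus/minus grades, the function name gpa_equivalent, and the example distribution are all assumptions made for illustration, not data from any school.

```python
# Assumed grade points on a standard 4.0 scale (plus/minus grades ignored).
GRADE_POINTS = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0, "F": 0.0}


def gpa_equivalent(percent_by_grade):
    """Return the mean grade points for a distribution of letter grades.

    percent_by_grade maps a letter grade to the percent of grades awarded.
    Shares are renormalized by their sum, so a distribution that leaves out
    withdrawals or pass/fail grades still yields a sensible average.
    """
    total = sum(percent_by_grade.values())
    if total <= 0:
        raise ValueError("distribution must contain a positive share of grades")
    weighted = sum(GRADE_POINTS[g] * p for g, p in percent_by_grade.items())
    return weighted / total


# Hypothetical distribution, invented for illustration only.
example = {"A": 40.0, "B": 35.0, "C": 15.0, "D": 5.0, "F": 5.0}
print(round(gpa_equivalent(example), 2))  # prints 3.0
```

A straight weighted average like this will generally differ somewhat from a school's reported GPA, because of plus/minus grading and differing course credit weights. That gap is the reason a calibration against schools with both kinds of data is needed.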

To obtain data on GPA trends, click on the institution of interest. Note that the data consist of two types, "GPA equivalent" and standard GPA. GPA equivalent is not the actual mean GPA of a given class year, but represents the average grade awarded in a given year or semester. GPAs for a graduating class can be expected to be higher than the GPA equivalent. When data sources do not indicate how GPAs were computed, I denote this as "method unspecified." All non-anonymous sources are stated on the data sheets. Some schools have given me data with the requirement that they be kept confidential.

Four-Year Schools

A

Adelphi, Alabama, Albion, Alaska-Anchorage, Allegheny, Amherst, Appalachian State, Arkansas, Ashland, Auburn

B

Ball State, Bates, Baylor, Boston U, Boston College, Bowdoin, Bowling Green, Bradley, Brigham Young, Brown, Bucknell, Butler

C

Carleton, Case Western, Central Florida, Central Michigan, Centre, Charleston, Chicago, Clemson, Coastal Carolina, College of New Jersey, Colorado, Colorado State, Columbia, Columbia (Chicago), Columbus State, Connecticut, Cornell, CSU-Fresno, CSU-Fullerton, CSU-Los Angeles, CSU-Monterey, CSU-Northridge, CSU-Sacramento, CSU-San Bernardino

D

Dartmouth, Delaware, DePauw, Drury, Duke, Duquesne

E

F

Florida, Florida Atlantic, Florida Gulf Coast, Florida International, Florida State, Francis Marion, Furman

G

Gardner-Webb, Georgetown, George Washington, Georgia, Georgia State, Georgia Tech, Gettysburg, Gonzaga, Grand Valley State, Grinnell

H

Hampden-Sydney, Harvard, Harvey Mudd, Haverford, Hawaii-Hilo, Hawaii-Manoa, Hilbert, Hope, Houston

I

Idaho, Idaho State, Illinois, Illinois-Chicago, Indiana, Iowa, Iowa State

J

James Madison, John Jay, Johns Hopkins

K

Kansas, Kansas State, Kennesaw State, Kent State, Kentucky, Kenyon, Knox

L

Lafayette, Lander, Lehigh, Lindenwood, Louisiana State

M

Macalester, Maryland, Messiah, Miami of Ohio, Michigan, Michigan-Flint, Middlebury, Minnesota, Minnesota-Morris, Minot State, Missouri, Missouri State, Missouri Western, MIT, Monmouth, Montana State, Montclair State

N

Nebraska-Kearney, Nebraska, Nevada-Las Vegas, Nevada-Reno, North Carolina, North Carolina-Asheville, North Carolina-Greensboro, North Carolina State, North Dakota, Northern Arizona, Northern Iowa, North Florida, North Texas, Northwestern, NYU

O

Ohio State, Ohio University, Oklahoma, Old Dominion, Oregon, Oregon State

P

Penn State, Pennsylvania, Pomona, Portland State, Princeton, Puerto Rico-Mayaguez, Purdue, Purdue-Calumet

R

Reed, Rensselaer, Rice, Roanoke, Rockhurst, Rutgers

S

St. Olaf, San Jose State, Siena, Smith, South Carolina, South Carolina State, Southern California, Southern Connecticut, Southern Illinois, Southern Methodist, Southern Utah, South Florida, Spelman, Stanford, Stetson, SUNY-Oswego, Swarthmore

T

Tennessee-Chattanooga, Texas, Texas A&M, Texas Christian, Texas-San Antonio, Texas State, Towson, Tufts

U

UC-Berkeley, UCLA, UC-San Diego, UC-Santa Barbara, Utah, Utah State

V

Valdosta State, Vanderbilt, Vassar, Vermont, Villanova, Virginia, Virginia Tech

W

Wake Forest, Washington, Washington and Lee, Washington State, Washington University (St. Louis), Wellesley, Western Michigan, Western Washington, West Florida, West Georgia, Wheaton, Wheeling Jesuit, Whitman, William and Mary, Williams, Winthrop, Wisconsin, Wisconsin-Green Bay, Wisconsin-Milwaukee, Wisconsin-Oshkosh, Wright State

Y

Canadian Schools

Brock, Calgary, Lethbridge, Simon Fraser, Waterloo, Western Ontario

April 4, 2016 note: I do not provide average GPAs for schools not posted online.

April 13, 2016 update: Added all the individual public data for four-year American schools and updated Figure 3 and Figure 4 to include more recent data for three schools.

July 7, 2016 update: Added some Canadian schools and updated data for three four-year American schools.

gradeinflation.com, copyright 2002, Stuart Rojstaczer, www.stuartr.com, no fee for not-for-profit use